Website Technical and On-Page SEO Audit

By: Joseph de Souza

A website SEO audit is necessary to find the shortcomings of a website and correct them where required. This article gives you an insight into the audit process and lists the procedures for examining a website before the start of the SEO process. It will also be useful as an SEO checklist for anyone wanting to set up a website. An SEO audit forms the starting point of any SEO package.

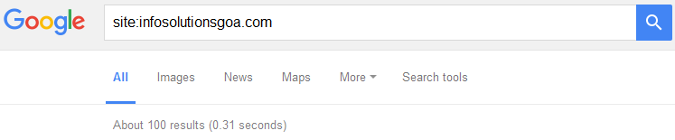

Website Indexing

The first thing to check in an SEO audit is the indexing of the website. We can do this using the site: operator in Google and Bing, e.g. site:infosolutionsgoa.com. It shows approximately how many pages are indexed. The numbers usually differ between the two search engines, and in almost all cases Google shows a higher number of indexed pages. For example, running a site: search on my website yields 100 pages in Google and 84 pages in Bing.

Next, check which page appears at the top of the list. This is usually the home page. You can also check whether any test subdomain is indexed.

During the course of my SEO work, I have come across test subdomains that were indexed. Whenever a developer sets up a site to develop a new website, test a new feature or test a software upgrade, it is of paramount importance to use noindex, nofollow in the robots meta tag.

However, even highly experienced developers often forget to do this, and the result is duplicate content.
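One way to make the tag impossible to forget is to generate it from the deployment environment instead of relying on memory. This is a minimal sketch. It assumes a Python-rendered site and a hypothetical `APP_ENV` environment variable that names the deployment. The function name is also illustrative.

```python
import os

def robots_meta_tag(env=None):
    """Return the robots meta tag to place in the page <head>.

    APP_ENV is a hypothetical environment variable. Anything other than
    "production", including an unset variable, is treated as a test or
    staging site and gets noindex, nofollow.
    """
    if env is None:
        env = os.environ.get("APP_ENV", "development")
    if env == "production":
        return '<meta name="robots" content="index, follow" />'
    return '<meta name="robots" content="noindex, nofollow" />'

print(robots_meta_tag("staging"))  # <meta name="robots" content="noindex, nofollow" />
```

Defaulting to noindex means a forgotten setting hides a test site rather than exposing it. The live site must set `APP_ENV=production` explicitly.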

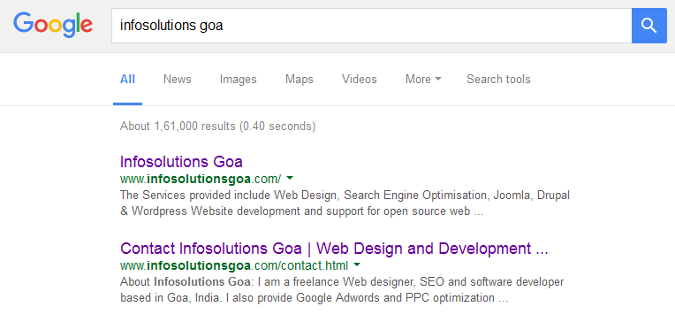

Search For Brand Name

Next, search for the brand name or the name of the website and check whether the home page appears at the top. The brand's website should appear at the top unless the website is very new or the name is a very generic term. If it does not, there could be a problem with the website, such as a heavy algorithmic or manual penalty.


You can also search for the exact home page URL, such as mywebsite.com. If there is no penalty, the website should appear at the top of the search results.

Website Page Structure

View the page source in the browser and look out for potential errors such as multiple H1 tags, multiple title tags and hidden text. You can uncover a lot of potential issues here.

For example, the popular WordPress theme Twenty Thirteen generates multiple H1 tags: one in the header (on top of the header image) and one for the post title. If you have an additional H1 heading in the post, there will be 3 H1 tags, which is not a recommended practice.
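When you audit many pages, you can script the check for multiple title and H1 tags. This minimal sketch uses only Python's standard `html.parser` module and works on page source you have already saved or downloaded. The class and function names are illustrative. One caveat: `<title>` elements inside inline SVG would also be counted.

```python
from html.parser import HTMLParser

class TagCounter(HTMLParser):
    """Counts <title> and <h1> start tags in an HTML document."""

    def __init__(self):
        super().__init__()
        self.counts = {"title": 0, "h1": 0}

    def handle_starttag(self, tag, attrs):
        # HTMLParser lower-cases tag names, so <H1> and <h1> are counted together
        if tag in self.counts:
            self.counts[tag] += 1

def structure_issues(html):
    """Return a list of human-readable problems found in the page source."""
    parser = TagCounter()
    parser.feed(html)
    issues = []
    for tag, count in parser.counts.items():
        if count == 0:
            issues.append(f"missing <{tag}>")
        elif count > 1:
            issues.append(f"{count} <{tag}> tags")
    return issues

page = """<html><head><title>Home</title></head>
<body><h1>Site name</h1><h1>Post title</h1></body></html>"""
print(structure_issues(page))  # ['2 <h1> tags']
```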

Pay particular attention to the home page and a few key pages. Highlight (select) all the text on the page and look for any evidence of hidden text.

You can also turn off CSS using a tool such as the MozBar to uncover hidden text.

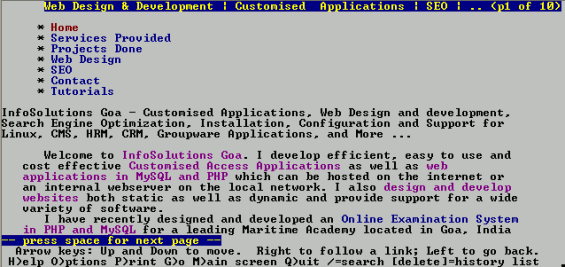

Check whether ALT tags are present and accurate. Also check the H1, H2 and H3 tags and their contents.

The correct way to use the H tags is one H1 tag for the primary title and H2 tags for each main subtopic. You can also look at the cached version of the page in Google's index and view the page in a text-only browser such as Lynx.
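To review the H1, H2 and H3 tags and their contents at a glance, you can print the heading outline of a page. This is a minimal standard-library sketch with illustrative names. It indents each heading by its level, which makes a second H1 or a missing H2 easy to spot.

```python
from html.parser import HTMLParser

class HeadingOutline(HTMLParser):
    """Collects (level, text) pairs for every <h1>, <h2> and <h3> element."""

    LEVELS = {"h1": 1, "h2": 2, "h3": 3}

    def __init__(self):
        super().__init__()
        self.outline = []
        self._current = None  # level of the heading being read, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag in self.LEVELS:
            self._current = self.LEVELS[tag]
            self._text = []

    def handle_data(self, data):
        if self._current is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag in self.LEVELS and self._current is not None:
            # Collapse whitespace, including text split by inline tags like <em>
            text = " ".join("".join(self._text).split())
            self.outline.append((self._current, text))
            self._current = None

def print_outline(html):
    parser = HeadingOutline()
    parser.feed(html)
    for level, text in parser.outline:
        print("  " * (level - 1) + f"H{level}: {text}")
    return parser.outline

print_outline("<h1>Web Design</h1><h2>Services</h2>"
              "<h3>Responsive <em>sites</em></h3><h2>Pricing</h2>")
# H1: Web Design
#   H2: Services
#     H3: Responsive sites
#   H2: Pricing
```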

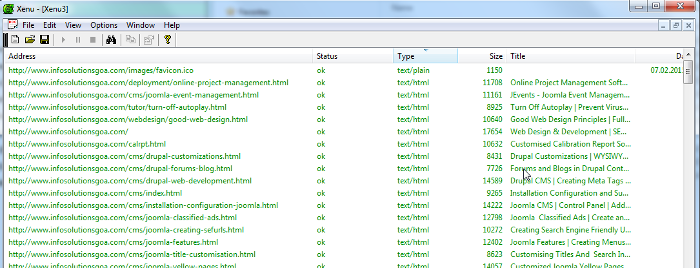

Site Crawl

The next item is crawling the website with a web crawler tool such as Screaming Frog (the free version lets you crawl up to 500 pages) or Xenu. You can uncover a lot of information, such as dead internal and external links, accessibility problems and missing titles.

It is also recommended to use a sitemap builder tool such as XML Sitemaps and see whether it is able to crawl the website.
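Crawlers like Screaming Frog do far more, but the core of a crawl is simple:

- start at the home page;
- follow internal links breadth-first;
- record pages that return an error or have no title.

This is a minimal standard-library sketch. `crawl` and `urllib_fetch` are illustrative names. It only follows links on the same host and does not check external links. It also ignores robots.txt and does not execute JavaScript. The fetch function is passed in so the crawl can be tried offline.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.error import HTTPError
from urllib.parse import urldefrag, urljoin, urlparse
from urllib.request import urlopen

class LinkTitleParser(HTMLParser):
    """Collects <a href> values and the text of the <title> element."""

    def __init__(self):
        super().__init__()
        self.links, self.title, self._in_title = [], "", False

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data

def urllib_fetch(url):
    """Returns (status, html). Network errors other than HTTP errors propagate."""
    try:
        with urlopen(url, timeout=10) as resp:
            return resp.status, resp.read().decode("utf-8", errors="replace")
    except HTTPError as err:
        return err.code, ""

def crawl(start_url, fetch=urllib_fetch, max_pages=500):
    """Breadth-first crawl of the start URL's host.

    Returns (broken, untitled): (url, status) pairs for pages answering with
    status >= 400, and the pages with an empty or missing <title>.
    """
    host = urlparse(start_url).netloc
    queue, seen = deque([start_url]), {start_url}
    broken, untitled = [], []
    fetched = 0
    while queue and fetched < max_pages:
        url = queue.popleft()
        status, html = fetch(url)
        fetched += 1
        if status >= 400:
            broken.append((url, status))
            continue
        parser = LinkTitleParser()
        parser.feed(html)
        if not parser.title.strip():
            untitled.append(url)
        for href in parser.links:
            # Resolve relative links and drop #fragments before comparing
            link, _ = urldefrag(urljoin(url, href))
            # Only same-host links are queued; external links are skipped
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append(link)
    return broken, untitled

# Offline example: a fake two-page site with one dead internal link
site = {
    "https://example.com/": (200, '<title>Home</title><a href="/about">About</a><a href="/old">Old</a>'),
    "https://example.com/about": (200, '<a href="/">Home</a>'),
}
print(crawl("https://example.com/", fetch=lambda u: site.get(u, (404, ""))))
# ([('https://example.com/old', 404)], ['https://example.com/about'])
```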


Website Speed Analysis

Users will leave your website if it loads too slowly. Several studies have found that 40% of people leave a website that takes more than 3 seconds to load, and Google's recommended page load time is 1.4 seconds or less. You can increase a website's speed by:

- enabling compression;
- optimizing images;
- minifying CSS and JavaScript;
- leveraging browser caching;
- reducing the number of HTTP requests.

If you have many CSS files, try to combine them into a single file or at the very least reduce their number. Images should be optimized for minimum file size without compromising on quality.

On an Apache server, enabling compression and leveraging browser caching require changes to the .htaccess file.
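After editing .htaccess, you can confirm that the server actually sends compressed, cacheable responses by inspecting the response headers. This is a rough sketch with illustrative function names. It only checks for a Content-Encoding of gzip, br or deflate, and for the presence of Cache-Control or Expires. It does not judge whether the caching values are sensible. Run it against static files such as a CSS file or an image, since those benefit most from browser caching.

```python
from urllib.request import Request, urlopen

def speed_header_issues(headers):
    """Flag missing compression and browser-caching headers.

    `headers` maps response header names to values; names are matched
    case-insensitively.
    """
    h = {name.lower(): value.lower() for name, value in headers.items()}
    issues = []
    encoding = h.get("content-encoding", "")
    if not any(e in encoding for e in ("gzip", "br", "deflate")):
        issues.append("not compressed: no gzip, br or deflate Content-Encoding")
    if "cache-control" not in h and "expires" not in h:
        issues.append("no Cache-Control or Expires header for browser caching")
    return issues

def fetch_headers(url):
    # Advertise compression support the way a browser would; urllib does not
    # decompress the body, but only the headers are needed here.
    req = Request(url, headers={"Accept-Encoding": "gzip, deflate, br"})
    with urlopen(req, timeout=10) as resp:
        return dict(resp.headers.items())

print(speed_header_issues({"Content-Type": "text/css"}))
# ['not compressed: no gzip, br or deflate Content-Encoding', 'no Cache-Control or Expires header for browser caching']
```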

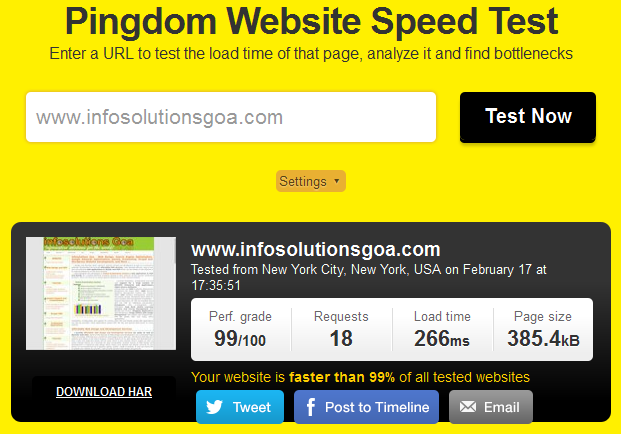

For still further speed improvement you can use a CDN (Content Distribution Network). To test a website's speed I use Pingdom Tools. After I made the above changes, my website loads in less than one second; in fact, my home page now loads in only 266 ms.

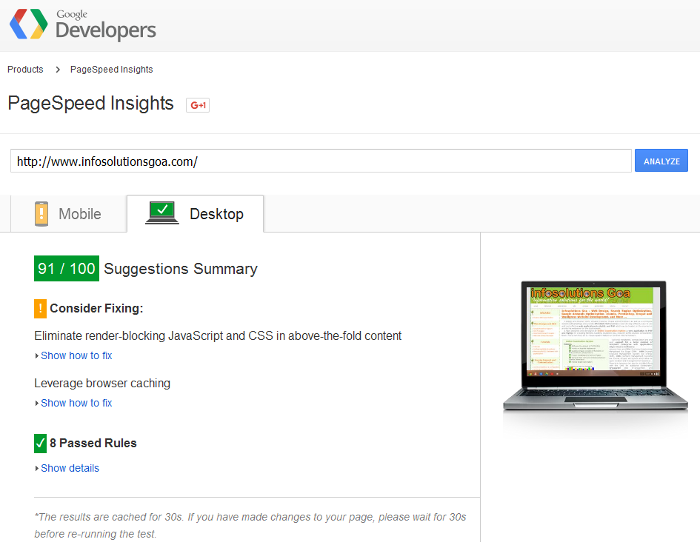

Another useful tool for website speed analysis is Google PageSpeed Insights. PageSpeed Insights also lets you download optimized versions of the images on the page under test. You can then back up the original images and replace them on your website with these.

Checking Robots.txt File and Sitemap.xml

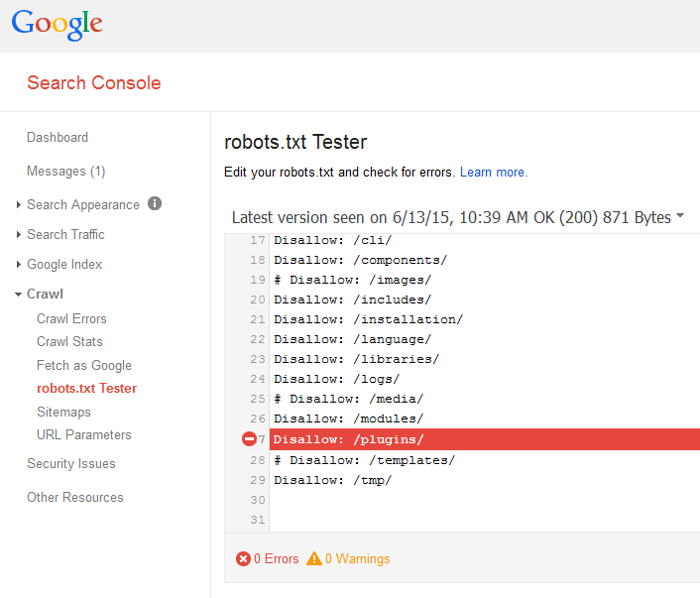

The robots.txt file has to be checked in every SEO audit, because resource files such as CSS, images and JavaScript can be accidentally blocked, with disastrous consequences. I even know of many websites that had put Disallow: / in their robots.txt file, which tells search engines not to crawl any part of the website. You can check the file directly or via Google Webmaster Tools, now called Google Search Console.
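You can test robots.txt rules against your resource paths with Python's built-in `urllib.robotparser`. This is a sketch. The rules, site and paths below are hypothetical examples. Python's parser has two limitations that make this a first pass rather than a definitive answer:

- it uses simple prefix matching and does not implement Google's `*` and `$` wildcards;
- it applies the first matching rule, whereas Google uses the most specific one.

```python
from urllib.robotparser import RobotFileParser

def blocked_paths(robots_txt, site, paths, agent="Googlebot"):
    """Return the paths that `agent` is not allowed to fetch under robots_txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [p for p in paths if not parser.can_fetch(agent, site + p)]

# Hypothetical rules that accidentally block the stylesheet directory
rules = """User-agent: *
Disallow: /wp-admin/
Disallow: /css/
"""
print(blocked_paths(rules, "http://www.yourwebsite.com",
                    ["/", "/css/style.css", "/js/main.js", "/images/logo.png"]))
# ['/css/style.css']
```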

It is also wise to check whether the website has an XML sitemap. This file is usually located at http://www.yourwebsite.com/sitemap.xml. If you have access to Google Search Console, you can also check whether it has been submitted.

You can also add your sitemap location to your robots.txt file so that search engines can discover your sitemap.xml file automatically. This is done by adding the Sitemap directive above the User-agent directive, as shown below:

Sitemap: http://www.yourwebsite.com/sitemap.xml

User-agent: *

Disallow:
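To confirm that the Sitemap directive is picked up and that the sitemap itself parses, you can combine `urllib.robotparser` (whose `site_maps()` method needs Python 3.8 or later) with `xml.etree.ElementTree`. This is a sketch with illustrative names. The robots.txt and sitemap contents are hypothetical; in practice you would download both files first, for example with `urllib.request.urlopen`. Because the code collects every sitemaps.org `<loc>` element, a sitemap index returns its child sitemap URLs.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def declared_sitemaps(robots_txt):
    """Sitemap URLs declared anywhere in a robots.txt file."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.site_maps() or []

def sitemap_urls(xml_text):
    """Every <loc> value in a sitemap (urlset) or sitemap index document."""
    root = ET.fromstring(xml_text)
    return [(loc.text or "").strip() for loc in root.iter(f"{{{SITEMAP_NS}}}loc")]

robots = "Sitemap: http://www.yourwebsite.com/sitemap.xml\nUser-agent: *\nDisallow:\n"
sample = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>http://www.yourwebsite.com/</loc></url>
  <url><loc>http://www.yourwebsite.com/services.html</loc></url>
</urlset>"""
print(declared_sitemaps(robots))  # ['http://www.yourwebsite.com/sitemap.xml']
print(sitemap_urls(sample))  # ['http://www.yourwebsite.com/', 'http://www.yourwebsite.com/services.html']
```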

Website Architecture

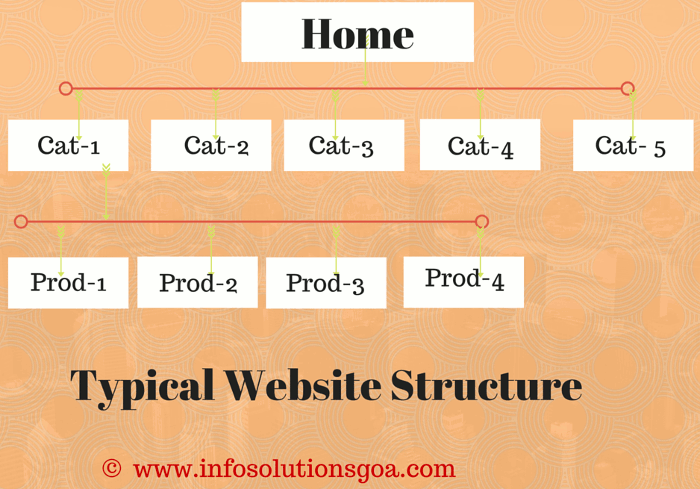

The site should be structured so that any page can be reached in at most 3 clicks from the home page. For example, an e-commerce site should be structured so that the home page leads to the category pages and each category page leads to the product pages. A large website may have subcategories as well.
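The 3-click rule is a shortest-path question. A breadth-first search from the home page gives the minimum number of clicks to every page. This is a sketch. It assumes you already have the internal link graph, for example from a crawler export, as a mapping from each page to the pages it links to. The URLs are hypothetical. Known pages that cannot be reached from the home page at all are reported as orphans.

```python
from collections import deque

def click_depths(links, home="/"):
    """Minimum number of clicks from `home` to every reachable page."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth

def too_deep(links, max_clicks=3, home="/"):
    depth = click_depths(links, home)
    deep = sorted(p for p, d in depth.items() if d > max_clicks)
    orphans = sorted(set(links) - set(depth))  # known pages with no path from home
    return deep, orphans

site = {
    "/": ["/boats/", "/sails/"],
    "/boats/": ["/boats/dinghies/"],
    "/boats/dinghies/": ["/boats/dinghies/laser/"],
    "/boats/dinghies/laser/": ["/boats/dinghies/laser/spares/"],
    "/sails/": [],
    "/old-offer.html": [],
}
print(too_deep(site))  # (['/boats/dinghies/laser/spares/'], ['/old-offer.html'])
```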

Some things worth checking here:

Is the website static or does it use a CMS (Content Management System)?

Many popular content management systems have category tags that generate a page displaying all posts or pages with that tag. This can lead to hundreds of thin content pages, a potential target for the Panda algorithm.

One popular site dealing in sailing equipment was hit because they did exactly this. Incidentally, they were using the very popular WordPress CMS and had created hundreds of pages through category tags. They finally set noindex in the robots meta tag of all their category pages, and their rankings were restored.

My advice is to use noindex, follow for these types of category pages.

Another thing to keep in mind is that if you use a CMS, it has to be kept up to date with the latest updates. It is good practice to check this during an SEO audit as well, because security issues on your website may affect its rankings.

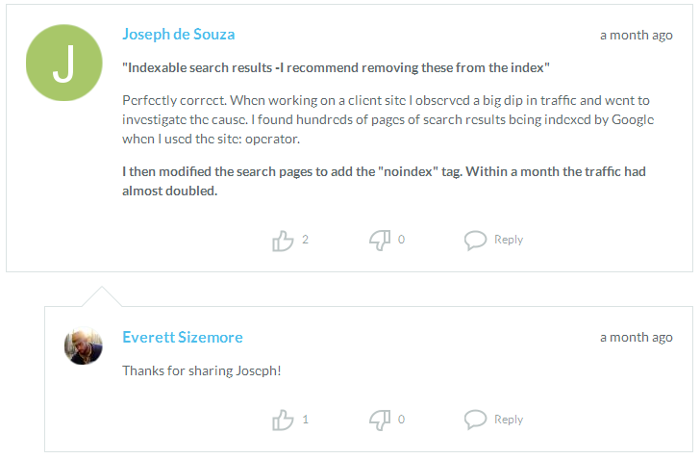

Another point to consider, especially when working on e-commerce websites, is whether the internal search pages are getting indexed. If they are, it is best to add the "noindex" robots meta tag to the search pages.

I had first-hand experience of this when working on a client site. I noticed a sudden dip in traffic and went to investigate the cause. When I used the site: operator, I found hundreds of search result pages indexed. A month after I added the "noindex" tag to the search pages, the traffic had almost doubled.
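When you go through site: results or a crawl export, it helps to flag URLs that look like internal search results or category/tag archives, so you can decide which ones need noindex. This sketch assumes WordPress-style default URLs:

- `/category/` and `/tag/` archives;
- the `s` search parameter.

The `/search/` prefix is a hypothetical extra. Treat all the patterns as placeholders to adapt to your own CMS.

```python
from urllib.parse import parse_qs, urlparse

# Placeholder patterns based on WordPress defaults; adjust for your CMS
ARCHIVE_PREFIXES = ("/category/", "/tag/")
SEARCH_PREFIXES = ("/search/",)
SEARCH_PARAMS = ("s",)

def classify(url):
    """Label a URL as 'search', 'archive' or 'content'."""
    parts = urlparse(url)
    # keep_blank_values so that an empty search such as "?s=" is still caught
    params = parse_qs(parts.query, keep_blank_values=True)
    if any(p in params for p in SEARCH_PARAMS) or parts.path.startswith(SEARCH_PREFIXES):
        return "search"
    if parts.path.startswith(ARCHIVE_PREFIXES):
        return "archive"
    return "content"

urls = [
    "https://www.example.com/?s=life+jackets",
    "https://www.example.com/tag/sailing/",
    "https://www.example.com/category/deck-hardware/",
    "https://www.example.com/life-jackets.html",
]
print([classify(u) for u in urls])  # ['search', 'archive', 'archive', 'content']
```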

There is also the case of custom-built CMSs.


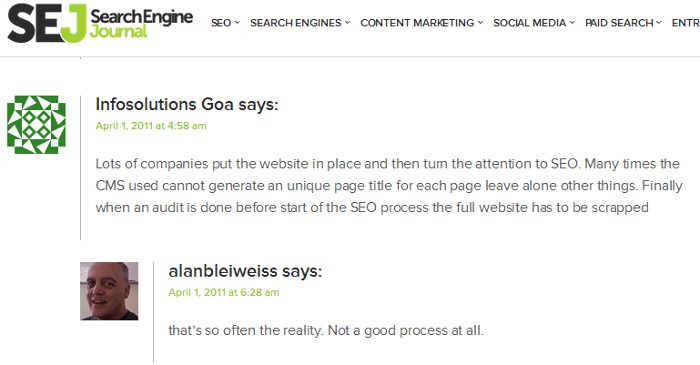

Many of them do not even have the ability to add a unique title and description meta tag for each page. If the company wants to achieve anything in SEO, a website built on such a CMS basically has to be scrapped and rebuilt.

Well-known SEO expert Alan Bleiweiss is a forensic SEO audit consultant whose audit clients include sites with upwards of 50 million pages and tens of millions of visitors a month. He agreed with me when I stated this in Search Engine Journal, a highly reputed SEO website. In that article, Alan put forward strong arguments for why SEO audits are needed before sites are built.

File and URL Names

It is good practice to have relevant keywords in the URL, separated by hyphens (-). Restrict the URL to around 4 to 5 words. If you are using a CMS, check whether it lets you set custom URLs.

I personally prefer to add .html at the end of the URL, so that it creates the impression of a static page even when using a content management system. It is also advisable to make sure that your CMS does not add session IDs or excessive parameters to URLs.
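If your CMS lets you set custom URLs, a slug can be generated from the page title along these lines. This is a sketch, not any particular CMS's behaviour. The stop-word list and the default 5-word limit are illustrative choices. The `.html` suffix follows the preference described above and can be switched off.

```python
import re
import unicodedata

# A small, illustrative stop-word list; extend or drop it as you prefer
STOP_WORDS = {"a", "an", "and", "the", "of", "for", "in", "on", "to", "with"}

def make_slug(title, max_words=5, suffix=".html"):
    """Turn a page title into a short, hyphen-separated URL slug."""
    # Reduce accented letters to plain ASCII, e.g. "é" becomes "e"
    ascii_title = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Anything that is not a letter or digit acts as a word separator
    words = [w for w in re.findall(r"[a-z0-9]+", ascii_title.lower()) if w not in STOP_WORDS]
    return "-".join(words[:max_words]) + suffix

print(make_slug("Web Design Services in Goa, India"))  # web-design-services-goa-india.html
```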

Best Practices For Domain Names

Best practice is for a domain name to be short (preferably 15 characters or fewer), memorable and free of hyphens and numbers. Avoid hyphens and numbers if you are starting a new site, or if your site is new and has little traffic. However, if you have a well-known site with a reasonable amount of traffic, you should not migrate to a new domain just to get rid of the hyphens. According to the best practices for domain names published by Moz, "use of hyphens also correlates highly with spammy behavior".

It is also good practice to avoid uncommon TLDs like .info, .cc, .ws and .name, which are also regarded as spam indicators.
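The domain name guidelines in this section can be turned into a quick checklist function. This is a sketch resting on two assumptions. First, the 15-character limit is applied to the name without its TLD. Second, the domain is a bare one such as "example.com"; the function does not understand multi-part suffixes such as .co.uk. The example domain is hypothetical.

```python
# TLDs listed in this section as uncommon and regarded as spam indicators
RISKY_TLDS = {"info", "cc", "ws", "name"}

def domain_warnings(domain, max_length=15):
    """Check a bare domain such as "example.com" against the guidelines."""
    name, _, tld = domain.lower().rpartition(".")
    warnings = []
    if len(name) > max_length:
        warnings.append(f"name is {len(name)} characters (over {max_length})")
    if "-" in name:
        warnings.append("contains hyphens")
    if any(ch.isdigit() for ch in name):
        warnings.append("contains numbers")
    if tld in RISKY_TLDS:
        warnings.append(f"uncommon TLD .{tld}")
    return warnings

print(domain_warnings("best-web-design-2024.info"))
# ['name is 20 characters (over 15)', 'contains hyphens', 'contains numbers', 'uncommon TLD .info']
```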

Canonical Issues

Ideally there should be only one URL for each page in the website.

If a CMS is being used, check that no two URLs display the same content.

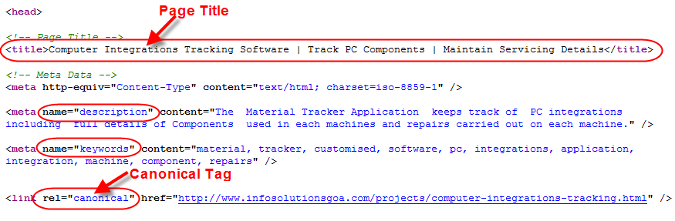

If they do, resolve it by using the canonical link tag.

For example:

<link rel="canonical" href="http://www.infosolutionsgoa.com/projects/computer-integrations-tracking.html" />

The canonical tag specifies the preferred URL from among a group of URLs that display the same or nearly the same content.

Websites with multiple copies of the same content on many URLs could be penalised for duplicate content.


Below is a screenshot displaying the description, keywords and canonical tags along with the title tag.

Make sure that the canonical tag points to the version of the page that you want indexed. Also check that the canonical tags of your pages do not all simply point to the home page.
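Both checks can be scripted across many pages. This is a minimal standard-library sketch with illustrative names. It reports three problems:

- a missing canonical tag;
- more than one canonical tag;
- an inner page whose canonical points at the home page.

It can also compare the canonical against the URL you expect. The URLs in the example reuse the canonical tag shown earlier.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> element."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        rel = (a.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and a.get("href"):
            self.canonicals.append(a["href"])

def canonical_issues(page_url, html, expected=None):
    finder = CanonicalFinder()
    finder.feed(html)
    if not finder.canonicals:
        return ["no canonical tag"]
    if len(finder.canonicals) > 1:
        return ["multiple canonical tags: " + ", ".join(finder.canonicals)]
    canonical = finder.canonicals[0]
    issues = []
    if expected is not None and canonical != expected:
        issues.append(f"canonical is {canonical}, expected {expected}")
    # An inner page canonicalised to the home page is a common mistake
    if urlparse(canonical).path in ("", "/") and urlparse(page_url).path not in ("", "/"):
        issues.append("inner page is canonicalised to the home page")
    return issues

good = ('<link rel="canonical" href="http://www.infosolutionsgoa.com/projects/'
        'computer-integrations-tracking.html" />')
print(canonical_issues("http://www.infosolutionsgoa.com/projects/"
                       "computer-integrations-tracking.html?ref=home", good))  # []
```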

In nearly every case there are also www and non-www versions of the website, because by default the server is configured to allow access both ways. This can be resolved with a 301 redirect from non-www to www, for example with these rules in an Apache .htaccess file:

RewriteEngine On

RewriteCond %{HTTP_HOST} ^mywebsite\.com [NC]

RewriteRule (.*) http://www.mywebsite.com/$1 [L,R=301]
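To verify the redirect, request the non-www host without following redirects and confirm that the answer is a 301 pointing at the www host. This is a sketch. `http.client` is used because it never follows redirects, so the raw response is visible. The host names are the placeholders used above. Some servers treat HEAD requests differently from GET, so confirm in a browser if the result surprises you.

```python
import http.client
from urllib.parse import urlparse

def redirect_ok(status, location, www_host):
    """True if a response is a permanent (301) redirect to the www host."""
    return status == 301 and urlparse(location or "").netloc.lower() == www_host.lower()

def check_non_www(bare_host, www_host, path="/"):
    # http.client does not follow redirects, so we see the 301 itself
    conn = http.client.HTTPConnection(bare_host, timeout=10)
    try:
        conn.request("HEAD", path)
        resp = conn.getresponse()
        return redirect_ok(resp.status, resp.getheader("Location"), www_host)
    finally:
        conn.close()

# Example (needs network access): check_non_www("mywebsite.com", "www.mywebsite.com")
```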

Mobile Friendly Website

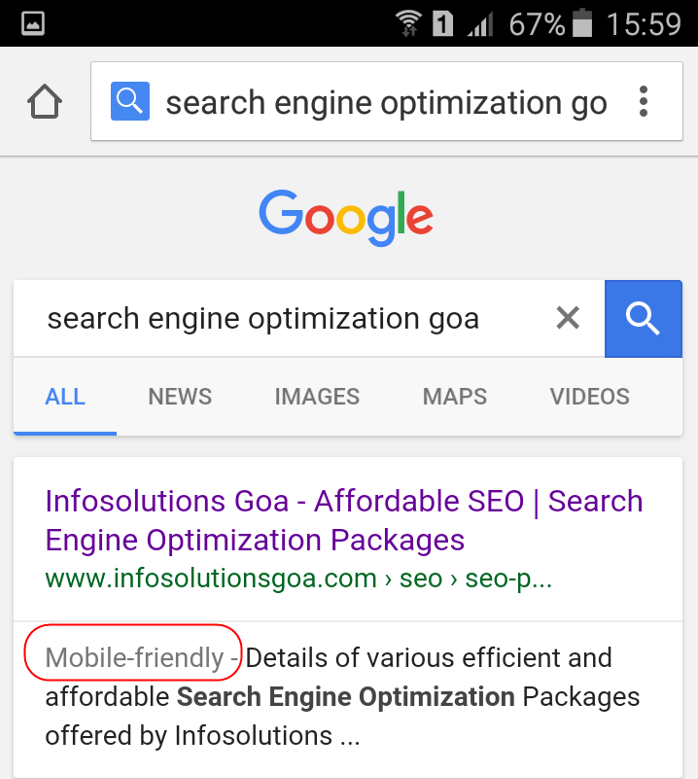

Today, visitors from mobile devices make up about 65% of all visitors to e-commerce websites and over 35% for other websites. Taking this into account, in April 2015 Google released a mobile-friendly ranking algorithm, popularly called "Mobilegeddon". It is designed to give a boost to mobile-friendly web pages in Google's mobile search results. If you search on a mobile device, Google attaches a "Mobile-friendly" tag to websites that display properly on mobile devices.

Although there are various options for making your website mobile-friendly, the best is responsive design. According to Google's definition: "Responsive web design (RWD) is a setup where the server always sends the same HTML code to all devices and CSS is used to alter the rendering of the page on the device."

With responsive web design, the website adjusts itself to the display size of each device, so your web pages look good on a wide range of devices, from desktop computers to tablets and mobile phones. It provides an optimal viewing, reading, navigation and interaction experience with a minimum of resizing, panning and scrolling.
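Responsive layouts depend on the page declaring a viewport, typically `<meta name="viewport" content="width=device-width, initial-scale=1">`. Without one, mobile browsers lay the page out at a desktop width and scale it down. This is a sketch that checks only this one prerequisite; it is no substitute for a full mobile-friendly test. The names are illustrative.

```python
from html.parser import HTMLParser

class ViewportFinder(HTMLParser):
    """Remembers the content of the <meta name="viewport"> tag, if any."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "meta" and (a.get("name") or "").lower() == "viewport":
            self.viewport = a.get("content") or ""

def has_responsive_viewport(html):
    """True if the page declares a viewport with width=device-width."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    settings = [part.strip().replace(" ", "") for part in finder.viewport.lower().split(",")]
    return "width=device-width" in settings

print(has_responsive_viewport('<meta name="viewport" content="width=device-width, initial-scale=1">'))  # True
```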

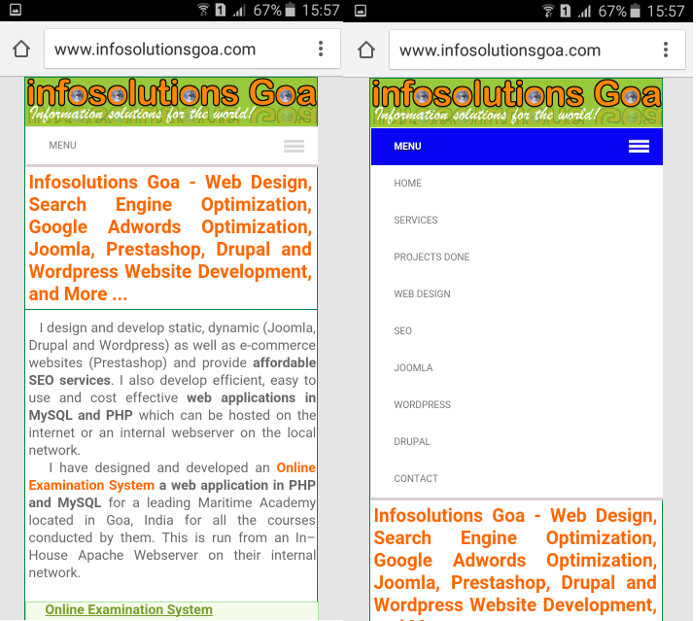

There are various frameworks available to implement Responsive Web Design like Bootstrap, Foundation and Skeleton. In the screenshots below you can view how my website displays on a mobile phone.

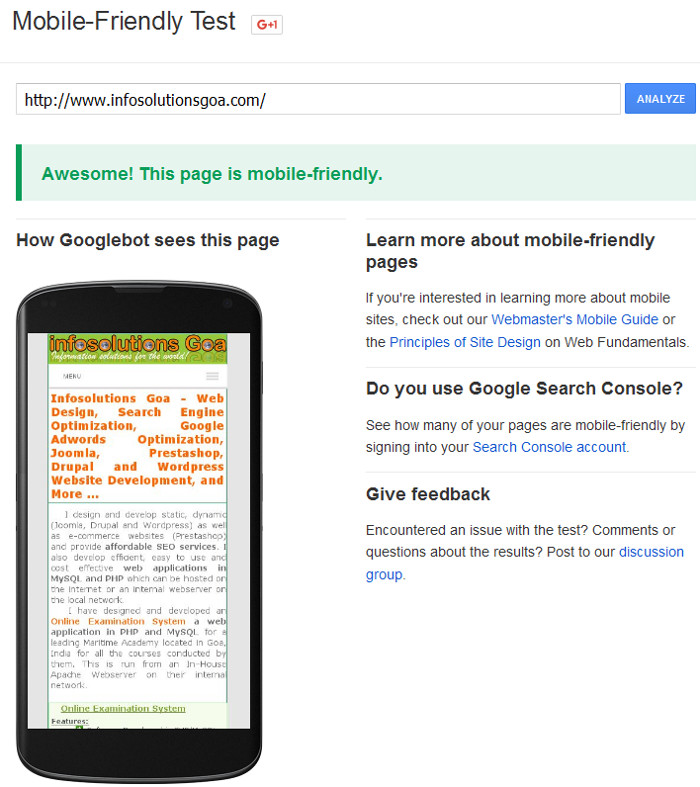

Checking if a Website is Mobile Friendly

If you want to check whether your website is mobile-friendly, both Google and Bing provide a mobile-friendly testing tool. Here's how my website looks when tested in Google's Mobile-Friendly Test tool.

Title and Description Meta Tag

The title is the most important on-page SEO factor. It should be between 55 and 65 characters long, although I have seen much longer titles, of up to 73 characters, displayed in mobile searches. The title has to accurately reflect the content of the page and contain keywords, if appropriate. All pages of your website should have unique title tags.

The description meta tag should be a brief summary of the page content, written to help the CTR (click-through rate), because it is usually displayed below the title in search results.
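Given the titles of all pages, for example from a crawl export, both the 55 to 65 character guideline and uniqueness can be checked in one pass. This is a sketch. The URLs are hypothetical. Titles are compared case-insensitively when looking for duplicates.

```python
from collections import defaultdict

def title_report(titles, min_len=55, max_len=65):
    """Check titles (URL -> title) for length and duplicates.

    Returns (length_issues, duplicates): URL -> length for titles outside the
    range, and normalised title -> URLs for titles used more than once.
    """
    length_issues = {
        url: len(t) for url, t in titles.items() if not min_len <= len(t) <= max_len
    }
    by_title = defaultdict(list)
    for url, t in titles.items():
        by_title[t.strip().lower()].append(url)
    duplicates = {t: urls for t, urls in by_title.items() if len(urls) > 1}
    return length_issues, duplicates

pages = {
    "/contact.html": "Contact Us",
    "/contact-us.html": "Contact us",
}
lengths, dupes = title_report(pages)
print(lengths)  # {'/contact.html': 10, '/contact-us.html': 10}
print(dupes)    # {'contact us': ['/contact.html', '/contact-us.html']}
```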

Image ALT Tags

Every image must have an ALT tag that accurately describes the image. You should also include keywords in the ALT tag if they are relevant to the image. Image file names should also be descriptive, and can be up to 4 short words separated by hyphens (-).
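This sketch flags two problems:

- `<img>` elements with a missing or empty alt attribute;
- file names that are not 1 to 4 words of letters and digits joined by hyphens.

That pattern is an assumption standing in for "descriptive". Whether the words and alt text actually describe the image still needs a human eye. The image paths are hypothetical.

```python
import posixpath
import re
from html.parser import HTMLParser
from urllib.parse import urlparse

# Assumption: 1-4 words of letters/digits joined by hyphens
NAME_PATTERN = re.compile(r"[A-Za-z0-9]+(?:-[A-Za-z0-9]+){0,3}")

class ImageAudit(HTMLParser):
    """Records <img> elements with a missing alt or a poorly formed file name."""

    def __init__(self):
        super().__init__()
        self.problems = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        a = dict(attrs)
        src = a.get("src") or ""
        if not (a.get("alt") or "").strip():
            self.problems.append((src, "missing or empty alt"))
        # File name without directory or extension, e.g. "red-sailing-dinghy"
        stem = posixpath.splitext(posixpath.basename(urlparse(src).path))[0]
        if not NAME_PATTERN.fullmatch(stem):
            self.problems.append((src, "file name is not 1-4 hyphen-separated words"))

def image_problems(html):
    audit = ImageAudit()
    audit.feed(html)
    return audit.problems

print(image_problems('<img src="/images/red-sailing-dinghy.jpg" alt="Red sailing dinghy">'
                     '<img src="/img/IMG_0042.JPG">'))
# [('/img/IMG_0042.JPG', 'missing or empty alt'), ('/img/IMG_0042.JPG', 'file name is not 1-4 hyphen-separated words')]
```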

Robots Meta Tag

Check that the robots meta tag is not blocking any page that should be indexed.

For example, if your page may be indexed and its links followed (which is the default), the corresponding robots meta tag is:

<meta name="robots" content="index, follow" />

Note that your page will be crawled and indexed if the robots meta tag is absent. Check whether noindex and/or nofollow is present and, if so, whether it is really required or was added by mistake.
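To check this across many pages, you can parse the robots meta directives and report whether a page is indexable and whether its links are followed. This is a standard-library sketch with illustrative names. It treats "none" as shorthand for "noindex, nofollow". It also reads a Googlebot-specific meta tag alongside the generic robots tag. It does not look at the X-Robots-Tag HTTP header.

```python
from html.parser import HTMLParser

class RobotsMeta(HTMLParser):
    """Collects directives from robots (and Googlebot-specific) meta tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "meta" and (a.get("name") or "").lower() in ("robots", "googlebot"):
            for part in (a.get("content") or "").split(","):
                self.directives.add(part.strip().lower())

def indexing_status(html):
    """Return (indexable, links_followed); both are True when no tag restricts them."""
    parser = RobotsMeta()
    parser.feed(html)
    d = parser.directives
    # "none" is shorthand for "noindex, nofollow"
    indexable = not ({"noindex", "none"} & d)
    followed = not ({"nofollow", "none"} & d)
    return indexable, followed

print(indexing_status('<meta name="robots" content="noindex, follow">'))  # (False, True)
```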

Keyword Mapping

Another important point in an SEO audit is keyword mapping: finding out which pages are optimized for which keywords. We also have to find out whether multiple pages are optimized for the same keywords.
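Once the keyword map is written down as page URL to target keywords, pages competing for the same keyword can be found by inverting the map. This is a sketch. The map below is hypothetical. Keywords are compared case-insensitively.

```python
from collections import defaultdict

def keyword_collisions(keyword_map):
    """Keywords (lower-cased) that more than one page is optimized for."""
    pages_by_keyword = defaultdict(list)
    for page, keywords in keyword_map.items():
        # dict.fromkeys removes repeats within one page while keeping order
        for kw in dict.fromkeys(k.strip().lower() for k in keywords):
            pages_by_keyword[kw].append(page)
    return {kw: pages for kw, pages in pages_by_keyword.items() if len(pages) > 1}

keyword_map = {
    "/": ["web design goa", "seo consultant goa"],
    "/web-design.html": ["Web Design Goa", "responsive web design"],
    "/seo.html": ["seo audit"],
}
print(keyword_collisions(keyword_map))  # {'web design goa': ['/', '/web-design.html']}
```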

Content Review

During the content review, check that all pages have unique content and that there is no duplication among pages within the website.

Also check that your pages are not duplicated outside the website. You can do this using the online tools available for checking duplicate content. Another quick way is to take several unique sentences from the web page and search for each of them in Google.

If you offer products or services in various locations, do not create tens or hundreds of location pages with identical content in which only the location name changes, e.g. Web Design Washington, Web Design LA, Web Design Seattle, etc.
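A rough way to catch near-identical location pages inside your own site is to compare word shingles (overlapping 3-word sequences) using Jaccard similarity. This is a standard technique swapped in for illustration. It is not the method used by any particular duplicate content checker. The page texts, URLs, shingle size and 0.5 threshold are all hypothetical.

```python
import re
from itertools import combinations

def shingles(text, size=3):
    """Set of overlapping `size`-word sequences in the text (lower-cased)."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + size]) for i in range(max(len(words) - size + 1, 1))}

def jaccard(a, b):
    """Shared shingles divided by all distinct shingles."""
    return len(a & b) / len(a | b) if a | b else 0.0

def near_duplicates(pages, threshold=0.5, size=3):
    """Return (url1, url2, similarity) for page pairs at or above `threshold`."""
    sets = {url: shingles(text, size) for url, text in pages.items()}
    pairs = []
    for u1, u2 in combinations(sets, 2):
        score = jaccard(sets[u1], sets[u2])
        if score >= threshold:
            pairs.append((u1, u2, round(score, 2)))
    return pairs

pages = {
    "/web-design-seattle.html": "We build fast, mobile friendly websites for small "
                                "businesses in Seattle. Call us today for a free quote.",
    "/web-design-la.html": "We build fast, mobile friendly websites for small "
                           "businesses in LA. Call us today for a free quote.",
    "/about.html": "Joseph de Souza is a freelance SEO consultant and web developer based in Goa.",
}
print(near_duplicates(pages))  # [('/web-design-seattle.html', '/web-design-la.html', 0.68)]
```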

Also refer to the canonical issues described earlier: the same content should be accessible through only one URL. If this is not possible, then you MUST USE the canonical link tag. If you are using a CMS like WordPress, use noindex, follow in the robots meta tag of all category pages. For an idea of how some of these content best practices can be implemented, refer to the Denmark E-Commerce Company Case Study.

Google Content Quality Guidelines

Google has clearly stated in its guidance on building high-quality sites that low-quality content on some parts of a website can impact the rankings of the whole site. Removing low-quality pages, or merging or improving the content of individual shallow pages into more useful pages, can therefore help increase the rankings of your higher-quality pages.

Check Landing Pages and Page Views

The Landing Pages report and the page views report in Google Analytics give you an idea of the content quality of your site. Take a serious look at any content that has had no page views in a 6-month period.
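Pages with no views often do not appear in the report at all. For that reason, compare the report against a full list of your pages, for example from the crawl or the sitemap. This is a sketch under three assumptions:

- you exported the page views report for the 6-month range as CSV;
- the columns are named "Page" and "Pageviews" (adjust these to your export);
- any comment lines at the top of the export have been removed.

The data below is hypothetical.

```python
import csv
import io

def pages_without_views(report_lines, all_pages, page_col="Page", views_col="Pageviews"):
    """Pages from `all_pages` with zero or no recorded page views in the report."""
    views = {}
    for row in csv.DictReader(report_lines):
        # Exports may format large numbers with thousands separators
        views[row[page_col]] = int(row[views_col].replace(",", "") or 0)
    return sorted(p for p in all_pages if views.get(p, 0) == 0)

report = io.StringIO("Page,Pageviews\n/,1520\n/services.html,340\n/old-offer.html,0\n")
site_pages = ["/", "/services.html", "/old-offer.html", "/tag/sailing/"]
print(pages_without_views(report, site_pages))  # ['/old-offer.html', '/tag/sailing/']
```

With a real export you would pass an open file instead, e.g. `open("pages.csv", newline="", encoding="utf-8")`.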

About The Author

Joseph de Souza is a leading freelance SEO consultant and web developer in Goa, India, with more than 15 years of experience in internet marketing.

He has a proven track record and has helped several companies increase their traffic many times over, thereby increasing their revenue and profits.

Besides English, Joseph has also successfully optimized two German language websites and a Danish language website and obtained outstanding results.